Fencing is a vital component of a virtualization cluster: when a cluster member fails, it must be prevented from accessing shared resources such as network disks or a SAN, so that any virtual machine that was running on it can be restarted on other members with the certainty that no data will be corrupted by simultaneous access.

Many methods exist to fence failed cluster members, mostly based on powering them off or disconnecting their network cards. Here I would like to show how to use network fencing in a Linux cluster environment (CMAN-based), using the fence_ifmib agent against a Cisco managed switch.

The logic behind this mechanism is very simple: once a node has been marked as dead, the agent uses the SNMP SET operation to tell the managed switch to shut down the ports the node is connected to.

Cisco switch SNMP view setup

Only two OIDs are needed by the agent: ifDescr and ifAdminStatus. The first is used to match the interface name used on the Cisco device with the one provided in the cluster configuration, the latter to get/set the port status.
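Both OIDs are columns of the ifTable in the standard IF-MIB (RFC 2863): ifDescr is 1.3.6.1.2.1.2.2.1.2 and ifAdminStatus is 1.3.6.1.2.1.2.2.1.7, where ifAdminStatus is 1 for up, 2 for down and 3 for testing. Fencing a port therefore boils down to finding its ifIndex through ifDescr and setting ifAdminStatus.<ifIndex> to 2. The Python sketch below illustrates only this lookup logic on an in-memory copy of the walked values; it is not the fence_ifmib source, it sends no SNMP traffic, and the helper names (find_ifindex, admin_status_oid) are hypothetical.

```python
# Simplified illustration of the ifDescr -> ifIndex -> ifAdminStatus lookup.
# Hypothetical helpers working on in-memory data; no SNMP request is sent.

IF_DESCR = "1.3.6.1.2.1.2.2.1.2"         # IF-MIB::ifDescr
IF_ADMIN_STATUS = "1.3.6.1.2.1.2.2.1.7"  # IF-MIB::ifAdminStatus

# ifAdminStatus values defined in RFC 2863
ADMIN_UP, ADMIN_DOWN, ADMIN_TESTING = 1, 2, 3


def find_ifindex(walk: dict[str, str], port: str) -> int:
    """Return the ifIndex whose ifDescr equals the port name from the cluster config."""
    prefix = IF_DESCR + "."  # trailing dot avoids matching other ifTable columns
    for oid, value in walk.items():
        if oid.startswith(prefix) and value == port:
            return int(oid[len(prefix):])
    raise KeyError(f"port {port!r} not found in ifDescr")


def admin_status_oid(ifindex: int) -> str:
    """OID to GET (port status) or SET to ADMIN_DOWN (fence) for one port."""
    return f"{IF_ADMIN_STATUS}.{ifindex}"


if __name__ == "__main__":
    # Sample values matching the ifDescr entries of the snmpwalk test in this post
    walk = {
        "1.3.6.1.2.1.2.2.1.2.10101": "GigabitEthernet0/1",
        "1.3.6.1.2.1.2.2.1.2.10102": "GigabitEthernet0/2",
    }
    idx = find_ifindex(walk, "GigabitEthernet0/1")
    print(idx, admin_status_oid(idx), ADMIN_DOWN)
```

Matching on ifDescr rather than hard-coding the ifIndex is what lets the cluster configuration refer to ports by their familiar IOS names.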

On the Cisco switch side, a simple SNMP setup is required to expose only a small part of the configuration, limited to the interfaces used by the cluster nodes:

interface GigabitEthernet0/1

 description NODE1

interface GigabitEthernet0/2

 description NODE2

...

ip access-list standard MYCLUSTER_accesslist

 permit 10.0.0.1

 permit 10.0.0.2

 deny any

...

snmp-server view MYCLUSTER_view ifDescr.10101 included

snmp-server view MYCLUSTER_view ifDescr.10102 included

snmp-server view MYCLUSTER_view ifAdminStatus.10101 included

snmp-server view MYCLUSTER_view ifAdminStatus.10102 included

snmp-server community MYCLUSTER_community view MYCLUSTER_view RW MYCLUSTER_accesslist

Interfaces GigabitEthernet0/1 and GigabitEthernet0/2 are connected to the cluster nodes NODE1 and NODE2, whose IP addresses are 10.0.0.1 and 10.0.0.2. These addresses are added to the MYCLUSTER_accesslist ACL so that they are authorized to access the SNMP engine; be sure to authorize every IP address used by the cluster nodes, so that whichever node invokes the fencing is allowed to do so. Then a new SNMP view named MYCLUSTER_view is defined; it contains only the ifDescr and ifAdminStatus OIDs of the nodes' interfaces, which have ifIndex 10101 and 10102 (as shown by the show snmp mib ifmib ifindex GigabitEthernet0/x command). Finally, everything is tied together in the MYCLUSTER_community community string, which is granted read-write (RW) permissions.
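Since this switch configuration grows with every node, it may be handy to generate it. The following Python sketch rebuilds the IOS snippet from a list of nodes. The Node class, the ios_snmp_config function and the MYCLUSTER naming prefix are hypothetical; the ifIndex values still have to be read from the switch with show snmp mib ifmib ifindex, and the output should be reviewed before pasting it into the device.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str       # used as the switch port description
    ip: str         # node address to permit in the SNMP ACL
    interface: str  # switch port the node is connected to
    ifindex: int    # as reported by "show snmp mib ifmib ifindex <interface>"


def ios_snmp_config(nodes: list[Node], prefix: str = "MYCLUSTER") -> str:
    """Return IOS configuration lines exposing only the nodes' ports via SNMP."""
    acl = f"{prefix}_accesslist"
    view = f"{prefix}_view"
    community = f"{prefix}_community"
    lines = []
    for n in nodes:
        lines += [f"interface {n.interface}", f" description {n.name}"]
    # Only the cluster nodes may talk to the SNMP engine
    lines.append(f"ip access-list standard {acl}")
    lines += [f" permit {n.ip}" for n in nodes]
    lines.append(" deny any")
    # The view contains just ifDescr and ifAdminStatus of each node's port
    for obj in ("ifDescr", "ifAdminStatus"):
        lines += [f"snmp-server view {view} {obj}.{n.ifindex} included" for n in nodes]
    lines.append(f"snmp-server community {community} view {view} RW {acl}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(ios_snmp_config([
        Node("NODE1", "10.0.0.1", "GigabitEthernet0/1", 10101),
        Node("NODE2", "10.0.0.2", "GigabitEthernet0/2", 10102),
    ]))
```

Keeping the node list in one place makes it harder to forget one of the three places (ACL, view, description) when a node is added.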

Once this is done, you can test the setup with the snmpwalk command (assuming the switch has IP address 10.0.0.100):

root@NODE1:~# snmpwalk -v 2c -c MYCLUSTER_community 10.0.0.100

iso.3.6.1.2.1.2.2.1.2.10101 = STRING: "GigabitEthernet0/1"

iso.3.6.1.2.1.2.2.1.2.10102 = STRING: "GigabitEthernet0/2"

iso.3.6.1.2.1.2.2.1.7.10101 = INTEGER: 1

iso.3.6.1.2.1.2.2.1.7.10102 = INTEGER: 1

iso.3.6.1.2.1.2.2.1.7.10102 = No more variables left in this MIB View (It is past the end of the MIB tree)

Only the ifDescr (iso.3.6.1.2.1.2.2.1.2) and ifAdminStatus (iso.3.6.1.2.1.2.2.1.7) OIDs of the two interfaces included in the view are returned.
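To check the result programmatically, the snmpwalk output can be turned into a per-port table. This is a minimal Python sketch that assumes the numeric iso.3.6.1... output format shown above; with MIB names loaded, net-snmp prints names such as IF-MIB::ifDescr.10101 instead, which this regular expression does not handle. The parse_walk function is hypothetical.

```python
import re

# Matches the numeric net-snmp output format, e.g.
#   iso.3.6.1.2.1.2.2.1.2.10101 = STRING: "GigabitEthernet0/1"
#   iso.3.6.1.2.1.2.2.1.7.10101 = INTEGER: 1
LINE_RE = re.compile(
    r'^iso\.3\.6\.1\.2\.1\.2\.2\.1\.([27])\.(\d+) = '
    r'(?:STRING: "([^"]*)"|INTEGER: (\d+))$'
)

# ifAdminStatus values from RFC 2863
ADMIN_STATUS = {1: "up", 2: "down", 3: "testing"}


def parse_walk(output: str) -> dict[int, dict[str, str]]:
    """Group ifDescr (column 2) and ifAdminStatus (column 7) values by ifIndex."""
    ports: dict[int, dict[str, str]] = {}
    for line in output.splitlines():
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # skips blank lines and the "No more variables left" trailer
        column, ifindex, text_value, int_value = match.groups()
        entry = ports.setdefault(int(ifindex), {})
        if column == "2" and text_value is not None:
            entry["descr"] = text_value
        elif column == "7" and int_value is not None:
            entry["admin"] = ADMIN_STATUS.get(int(int_value), int_value)
    return ports
```

It can be fed the captured output of the snmpwalk command above; when run from a surviving node after a successful fencing, the fenced node's port should be reported as "down".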

Cluster configuration

On the cluster side, a simple configuration is needed:

<fencedevices>

<fencedevice agent="fence_ifmib" community="MYCLUSTER_community"

ipaddr="10.0.0.100" name="fence_ifmib_MYSWITCH" snmp_version="2c"/>

</fencedevices>

<clusternodes>

<clusternode name="NODE1" nodeid="1" votes="1">

<fence>

<method name="1">

<device name="fence_ifmib_MYSWITCH" action="off" port="GigabitEthernet0/1"/>

</method>

</fence>

</clusternode>

<clusternode name="NODE2" nodeid="2" votes="1">

<fence>

<method name="1">

<device name="fence_ifmib_MYSWITCH" action="off" port="GigabitEthernet0/2"/>

</method>

</fence>

</clusternode>

</clusternodes>
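For larger clusters the same XML can be produced with Python's standard xml.etree.ElementTree module. This is a sketch assuming a single switch and one port per node; the fence_config helper is hypothetical and only emits the two fragments shown above, not a complete cluster.conf (the enclosing cluster element, config_version and the rest of the configuration are left to your platform's tools).

```python
import xml.etree.ElementTree as ET


def fence_config(switch_name, switch_ip, community, nodes):
    """Return (<fencedevices>, <clusternodes>) elements for one fence_ifmib switch.

    nodes is a list of (node_name, nodeid, switch_port) tuples.
    """
    fencedevices = ET.Element("fencedevices")
    ET.SubElement(fencedevices, "fencedevice", agent="fence_ifmib",
                  community=community, ipaddr=switch_ip,
                  name=switch_name, snmp_version="2c")

    clusternodes = ET.Element("clusternodes")
    for node_name, nodeid, port in nodes:
        node = ET.SubElement(clusternodes, "clusternode", name=node_name,
                             nodeid=str(nodeid), votes="1")
        fence = ET.SubElement(node, "fence")
        method = ET.SubElement(fence, "method", name="1")
        # action="off" keeps the port disabled instead of cycling it (off, then on)
        ET.SubElement(method, "device", name=switch_name, action="off", port=port)
    return fencedevices, clusternodes


if __name__ == "__main__":
    devices, nodes = fence_config(
        "fence_ifmib_MYSWITCH", "10.0.0.100", "MYCLUSTER_community",
        [("NODE1", 1, "GigabitEthernet0/1"), ("NODE2", 2, "GigabitEthernet0/2")],
    )
    for element in (devices, nodes):
        ET.indent(element)  # pretty-printing, available since Python 3.9
        print(ET.tostring(element, encoding="unicode"))
```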

How to edit and apply the configuration is out of the scope of this post, since it depends on the cluster implementation. You can find more information in the guide for your specific platform (for example, Configuring Fencing on Proxmox Two-Node High Availability Cluster, 2-Node Red Hat KVM Cluster Tutorial, or Red Hat Enterprise Linux 6 – Cluster Administration).

To test the final solution, you can run the fence_node program against a cluster node (be careful: if everything works, that node will be cut off from the network):

root@NODE2:~# fence_node NODE1 -vv

fence NODE1 dev 0.0 agent fence_ifmib result: success

agent args: action=off port=GigabitEthernet0/1 nodename=NODE1 agent=fence_ifmib community=MYCLUSTER_community ipaddr=10.0.0.100 snmp_version=2c

fence NODE1 success

Multiple NICs connected to multiple switches

If a node has more than one network interface, connected to multiple switches for redundancy, a simple addition to the cluster configuration extends fencing to the additional ports.

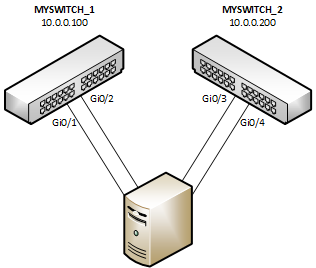

For example, consider a node with 4 NICs, a pair connected to MYSWITCH_1 (10.0.0.100) on ports GigabitEthernet0/1 and 0/2, and the other two to MYSWITCH_2 (10.0.0.200) on ports GigabigEthernet0/3 and 0/4:

A node with 4 NICs connected to 2 switches

The following cluster configuration cuts the node off from both switches when it is fenced:

<fencedevices>

<fencedevice agent="fence_ifmib" community="MYCLUSTER_community"

ipaddr="10.0.0.100" name="fence_ifmib_MYSWITCH_1" snmp_version="2c"/>

<fencedevice agent="fence_ifmib" community="MYCLUSTER_community"

ipaddr="10.0.0.200" name="fence_ifmib_MYSWITCH_2" snmp_version="2c"/>

</fencedevices>

<clusternodes>

<clusternode name="NODE1" nodeid="1" votes="1">

<fence>

<method name="1">

<device name="fence_ifmib_MYSWITCH_1" action="off" port="GigabitEthernet0/1"/>

<device name="fence_ifmib_MYSWITCH_1" action="off" port="GigabitEthernet0/2"/>

<device name="fence_ifmib_MYSWITCH_2" action="off" port="GigabitEthernet0/3"/>

<device name="fence_ifmib_MYSWITCH_2" action="off" port="GigabitEthernet0/4"/>

</method>

</fence>

</clusternode>

</clusternodes>
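fenced runs every device listed in a method and considers the method successful only if all of them succeed, so the node is reported as fenced only once all four ports have been shut down. Because a mistyped device name or a broken tag is easy to introduce when adding devices, the following Python sketch parses a cluster.conf and lists, for each node, the switch and port of every device in its first fence method. The fence_ports helper is hypothetical and assumes the fragments are wrapped in the usual cluster root element.

```python
import xml.etree.ElementTree as ET


def fence_ports(cluster_conf: str) -> dict[str, list[tuple[str, str, str]]]:
    """Map each node to (switch_ip, fencedevice_name, port) for its first fence method.

    Raises ET.ParseError on malformed XML and KeyError when a <device>
    refers to a <fencedevice> that is not defined.
    """
    root = ET.fromstring(cluster_conf)
    # fencedevice name -> switch IP address
    switches = {fd.get("name"): fd.get("ipaddr") for fd in root.iter("fencedevice")}
    result: dict[str, list[tuple[str, str, str]]] = {}
    for node in root.iter("clusternode"):
        method = node.find("fence/method")  # first method only
        devices = method.findall("device") if method is not None else []
        result[node.get("name")] = [
            (switches[d.get("name")], d.get("name"), d.get("port")) for d in devices
        ]
    return result
```

A mismatched closing tag makes ET.fromstring raise ET.ParseError, and a device pointing to an undefined fencedevice raises KeyError, so both mistakes surface before the configuration is deployed.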

References

“Cisco IOS – Configuring SNMP Support”, Cisco.com: http://www.cisco.com/en/US/docs/ios/12_2/configfun/configuration/guide/fcf014.html#wp1001150

“fence_ifmib – Linux man page”, die.net: http://linux.die.net/man/8/fence_ifmib

“fenced – Linux man page”, die.net: http://linux.die.net/man/8/fenced

Author: Pier Carlo Chiodi
